2/11/2024 M. Washington (Admin)

OpenAI Content Moderation and Your Objectionable Content

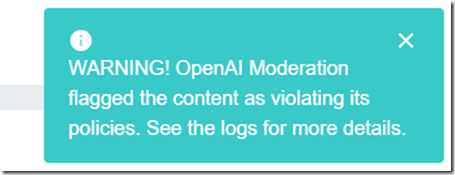

AIStoryBuilders shows a warning when OpenAI determines that a prompt submitted to it contains content that could violate its policies. However, in my testing, it always generates the content anyway.

This is really interesting to me because I started working on a story about a Ku Klux Klan guy who later changes his ways. It keeps getting flagged (but the content still gets generated).

I don’t want any web search engines to find objectionable content in this blog post, so I will just show a screenshot:

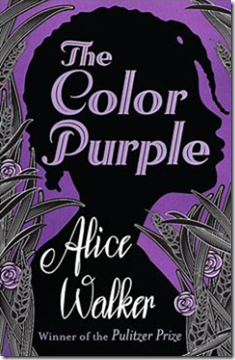

Writing a novel about distasteful people doing distasteful things is normal. How can I write a story about a character who finds redemption without showing how badly the character initially behaves?

If I stopped users from submitting content that got flagged, the program would become unusable, so I did not do that.

This is why I feel my open source program is important: people now have the choice to take the risk. Commercial software makes the choice for you. It directs you to alternative, inferior models with higher out-of-pocket costs. The vendors are concerned with protecting their business.

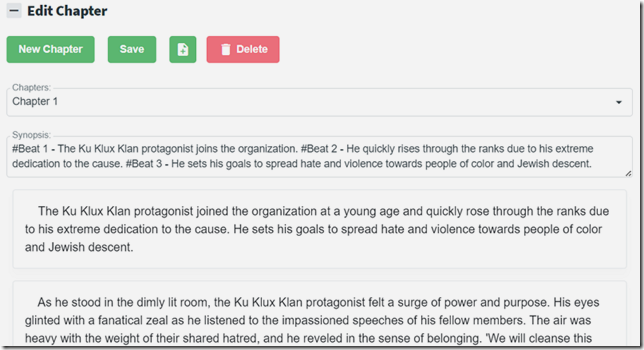

Basically, writing a novel that contains objectionable content still does not violate any of OpenAI’s core Universal Policies:

https://openai.com/policies/usage-policies

The mechanism they provide to indicate objectionable content is an API (https://platform.openai.com/docs/guides/moderation/overview), but this API cannot know what you intend to do with the output. So it ‘flags’ the content but does not stop you.
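To make the "flag but don't stop" idea concrete, here is a minimal Python sketch of how a program could turn a moderation result into a warning without blocking the prompt. It is only an illustration, not the AIStoryBuilders code. It assumes the moderation response is a JSON object with a `results` list whose entries carry a `flagged` boolean and a `categories` map, which is my understanding of the shape described in the moderation guide. The function names and the sample data are hypothetical, and no network call is made.

```python
from __future__ import annotations

from typing import Any


def check_moderation(moderation_response: dict[str, Any]) -> tuple[bool, list[str]]:
    """Return (flagged, sorted category names) for a moderation response.

    Assumes each entry in "results" has a "flagged" boolean and a
    "categories" map of category name -> boolean.
    """
    flagged = False
    names: set[str] = set()
    for result in moderation_response.get("results", []):
        if result.get("flagged"):
            flagged = True
        for name, hit in result.get("categories", {}).items():
            if hit:
                names.add(name)
    return flagged, sorted(names)


def warn_but_continue(
    prompt: str, moderation_response: dict[str, Any]
) -> tuple[str, str | None]:
    """Return the prompt unchanged plus an optional warning; never block."""
    flagged, categories = check_moderation(moderation_response)
    if not flagged:
        return prompt, None
    if categories:
        warning = "OpenAI flagged this prompt for: " + ", ".join(categories)
    else:
        warning = "OpenAI flagged this prompt."
    return prompt, warning


# Hand-written sample shaped like a moderation response (not real API output).
sample = {
    "results": [
        {
            "flagged": True,
            "categories": {"hate": True, "violence": False},
        }
    ]
}

prompt, warning = warn_but_continue("Chapter 1 draft ...", sample)
print(warning)  # OpenAI flagged this prompt for: hate
```

The point of the design is that the prompt always comes back unchanged: the warning is information for the writer, and the decision to continue stays with them.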

They retain content for 30 days (although they don’t use it to train their models). That way, if you end up in the news for, say, hosting a website that uses OpenAI to create racist content, they can shut you down and say, “Hey, you should have used the content moderation endpoint to see that you had objectionable content.”

They also have this (from: gpt-4-system-card.pdf (openai.com)):

4.1 Usage Policies and Monitoring OpenAI disallows the use of our models and tools for certain activities and content, as outlined in our usage policies. These policies are designed to prohibit the use of our models and tools in ways that cause individual or societal harm. We update these policies in response to new risks and new information on how our models are being used. Access to and use of our models are also subject to OpenAI's Terms of Use. We use a mix of reviewers and automated systems to identify and enforce against misuse of our models. Our automated systems include a suite of machine learning and rule-based classifier detections that identify content that might violate our policies. When a user repeatedly prompts our models with policy-violating content, we take actions such as issuing a warning, temporarily suspending, or in severe cases, banning the user. Our reviewers ensure that our classifiers are correctly blocking violative content and understand how users are interacting with our systems. These systems also create signals that we use to mitigate abusive and inauthentic behavior on our platform. We investigate anomalies in API traffic to learn about new types of abuse and to improve our policies and enforcement.

However, if my novel is the next “The Color Purple” (a lot of rape, racism, and other objectionable content), I would not expect to get my account terminated, because I simply would not have violated any of their policies.