1/28/2024 M. Washington (Admin)

Why Did I Create AIStoryBuilders?

I recently wrote a book (Azure OpenAI Using C#: Exploring Microsoft Azure OpenAI and embeddings and vectors to implement Artificial Intelligence applications using C#) and I was really excited about the potential uses of AI Large Language Models.

I wanted to use ChatGPT to help me write my novel but realized that it does not handle long-form content very well. You have to keep reminding it who your characters are and what they did. I realized you need a program that keeps a database of this information and feeds it to the AI when asking it to generate content. I decided to create a free, open-source program that does that.

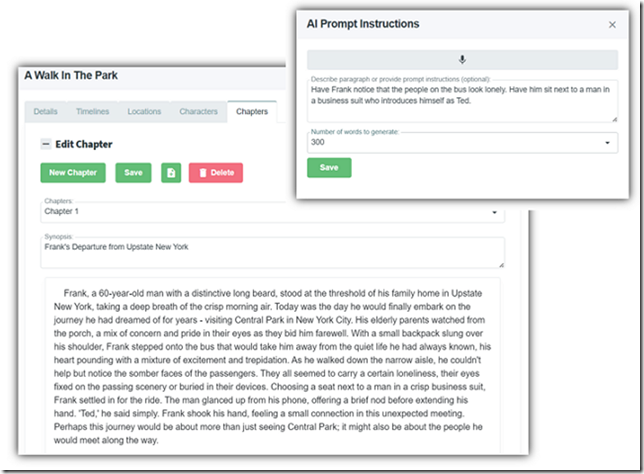

I spent months creating AIStoryBuilders and I am confident in saying that AI can only generate 300-500 words of decent quality at a time. To get that, my program has to send a prompt of 2000-5000 words! Seriously, the AI is only able to produce quality output if you give it a massive amount of content and instructions.

The Research

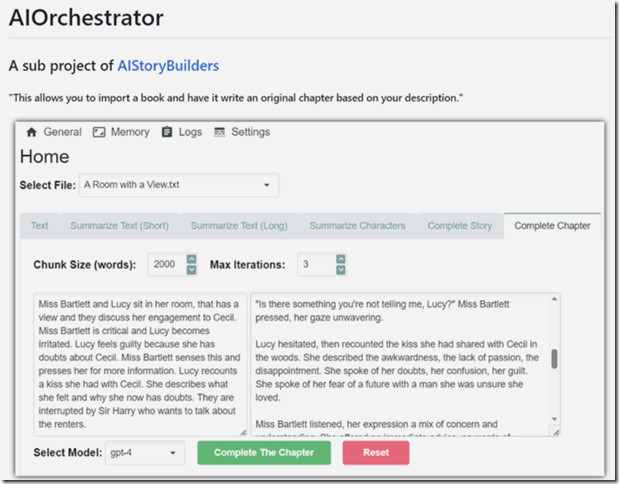

I didn’t just sit down and create AIStoryBuilders. First, I spent two months creating a proof-of-concept called AIOrchestrator. You can get that code here: https://github.com/ADefWebserver/AIOrchestrator. I also created a full write-up about AIOrchestrator here: Processing Large Amounts of Text in LLM Models.

You may not want to read the entire article so I used ChatGPT to summarize the top points:

Challenge of Limited Context: Large Language Models (LLMs) face difficulty in processing extensive texts due to their limited context window. This limitation often leads to the omission of critical details like character traits and actions.

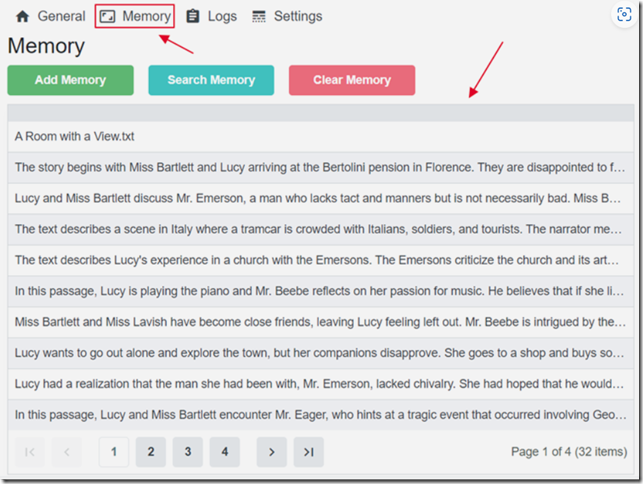

Vector Database as a Solution: To overcome this limitation, the blog introduces the use of a vector database. This approach allows for the storage of an entire book, effectively serving as an 'unlimited memory' for the LLM.

Introducing AIOrchestrator: A sample application called AIOrchestrator is showcased. It enables users to import a book and guide the LLM to write an original chapter based on specific descriptions.

Setting Up AIOrchestrator: The post provides detailed instructions for downloading, installing, and configuring AIOrchestrator, including the setup of necessary software and OpenAI keys.

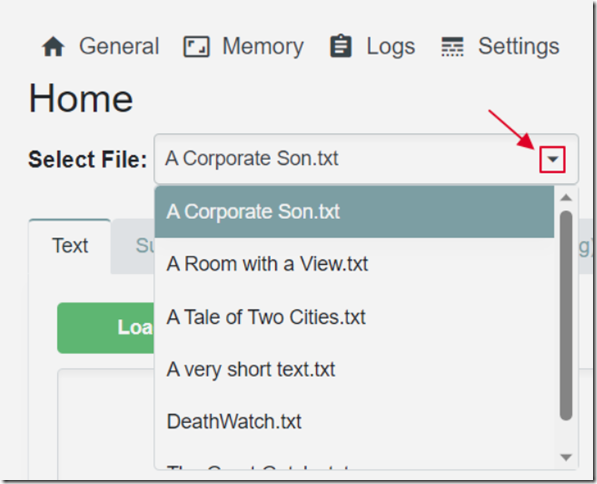

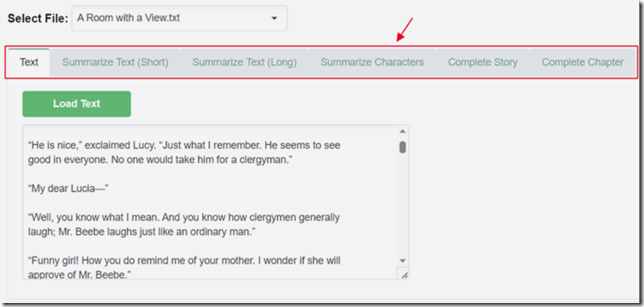

Managing Files within the App: Users can add their text stories to the application. This feature allows these texts to be selected and utilized within various functionalities of the app.

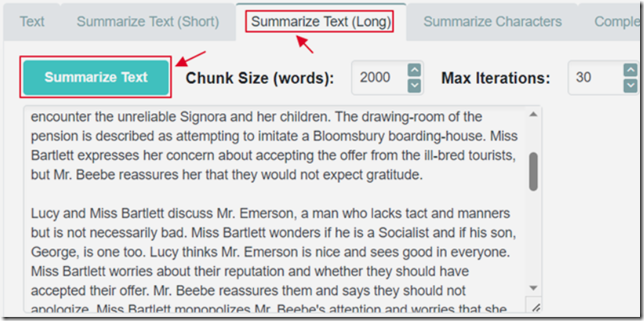

Diverse Functionalities of the App: AIOrchestrator offers multiple features such as text viewing, short and long form text summarization, character summarization, and the capability to complete stories or chapters.

Memory Search Capability: The application includes a function to search within the vector database using terms converted into embeddings. This aids in finding relevant text summaries for the LLM to use.

Exploring Text Summarization Methods: The post discusses two approaches to text summarization: a short form (creating a single summary) and a long form (summarizing each text chunk separately), each with its own merits and drawbacks.

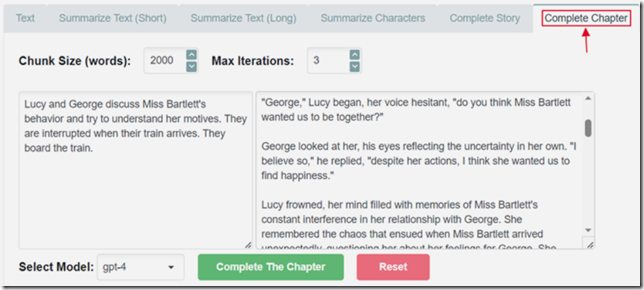

Advanced 'Complete Chapter' Feature: This specific feature of the app exemplifies processing large text amounts with LLMs. It involves choosing text chunk sizes and storing summarized sections in a vector database for efficient retrieval and use.
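
To make the memory-search idea above a little more concrete, here is a minimal sketch in Python. It is not the AIOrchestrator code. The `toy_embedding` function is a made-up stand-in (a simple word-count vector) so the example runs without an API key; a real system would call an embedding model instead. `MemoryStore`, `split_into_chunks`, and the sample sentences are hypothetical names and data chosen for illustration.

```python
import math
import re
from collections import Counter


def toy_embedding(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def split_into_chunks(text: str, words_per_chunk: int) -> list:
    """Split text into consecutive chunks of at most words_per_chunk words."""
    words = text.split()
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


class MemoryStore:
    """In-memory list of (text, vector) pairs, searched by cosine similarity."""

    def __init__(self) -> None:
        self._entries = []

    def add(self, text: str) -> None:
        self._entries.append((text, toy_embedding(text)))

    def search(self, query: str, top_k: int = 3) -> list:
        """Return up to top_k (score, text) pairs, best match first."""
        query_vector = toy_embedding(query)
        scored = [
            (cosine_similarity(query_vector, vector), text)
            for text, vector in self._entries
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


# In the approach described above, these entries would be chunk summaries.
store = MemoryStore()
store.add("Maria opens her restaurant in the old harbor district.")
store.add("A storm floods the valley and the bridge collapses.")
store.add("Tom remembers the summer he learned to sail.")

results = store.search("Where is the restaurant Maria opened?", top_k=1)
best_text = results[0][1]
print(best_text)
```

The search returns the stored text whose vector is closest to the query vector, which is the text you would then feed to the LLM as background.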

Finally Understanding The Problem

The end result of all that brought me to the following conclusions:

- AI Large Language Models like ChatGPT are really bad at handling long form content - I mean REALLY bad

- The only way to get a Large Language Model like ChatGPT to generate decent content is to provide it a massive amount of content (this is called grounding)

- Using a vector database is the best way to find relevant content to feed to the AI

- Even when you provide this massive amount of content, the AI is only able to generate 300-500 words of any decent quality at a time

When I completed AIOrchestrator I was still faced with the following challenge:

- How do you track all the story elements like characters and locations and their progressive changes?

Remember, I have now concluded that I must feed the AI everything it needs to know about the story for each paragraph it writes.

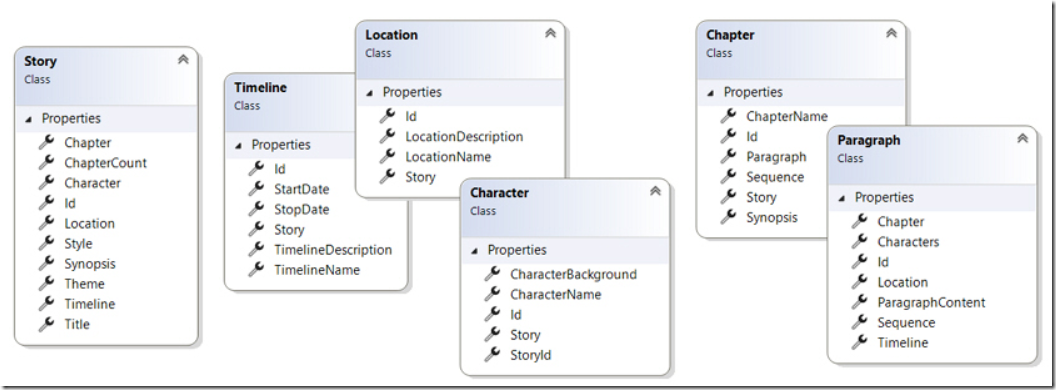

The Database

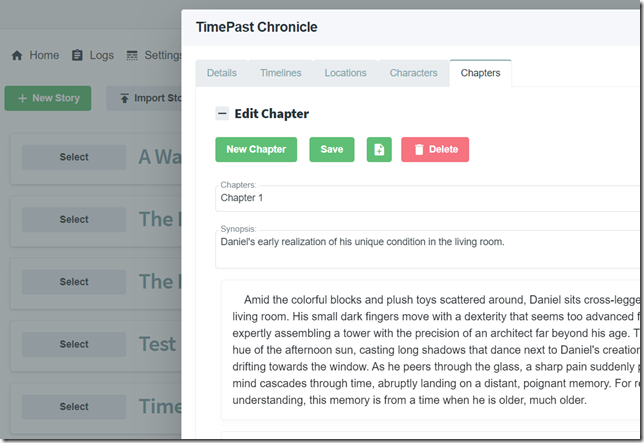

I needed to create a database, and a program that would allow you to manage all that information. The design of the database is very important because it determines what the program can do. It was at this point that I had to draw upon my years of writing long form fiction to ensure it would capture the important elements of any story.

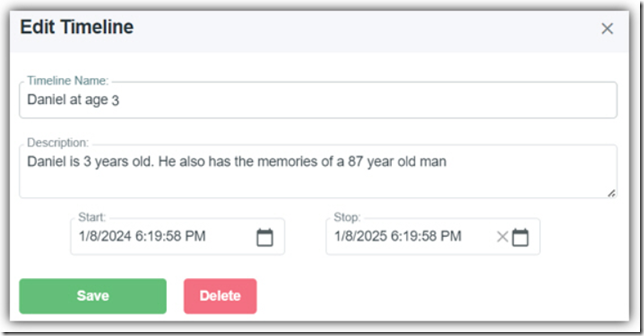

Timelines

The key to the database, and the aspect that I think many other applications omit, is timelines. Timelines come before characters or locations, because the characters and locations in a story exist on a specific timeline in the story. Therefore, timelines form the foundation of the story element database.

This is the only way to track the progressive changes of all the story elements like characters and locations and handle things like flashbacks.

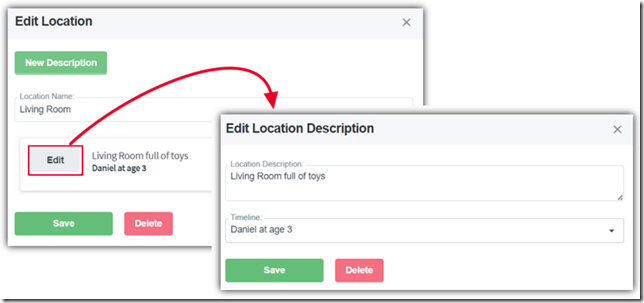

Locations

Next is locations. These come before characters because every reference to a character takes place at a location, therefore the database of locations must first exist. Locations also have different descriptions and attributes based on timelines.

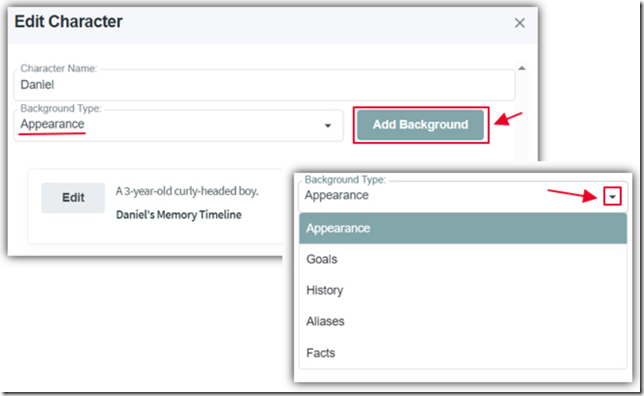

Characters

Characters are the most important part of any story so the database tracks a large number of elements for the characters including:

- Appearance - Anything that describes how a character looks. For example tall or blonde.

- Goals - Things that the character wants to do, for example, to open a restaurant.

- History - Important things about the character that don't happen in the story but are referred to in the story.

- Aliases - Other names the character is known by, for example Mom.

- Facts - Important things about the character that can explain a character's motivation. For example the character has cancer.
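
One way to picture the timeline, location, and character design described above is the following minimal Python sketch. It is only an illustration under stated assumptions, not the actual AIStoryBuilders schema: the class names `Attribute` and `StoryElement`, the method `attributes_on`, and the timeline names are all hypothetical. The one idea taken from the text is that every attribute of a character or location belongs to a timeline.

```python
from dataclasses import dataclass, field


@dataclass
class Attribute:
    kind: str      # e.g. "Appearance", "Goals", "History", "Aliases", "Facts"
    text: str      # the description itself
    timeline: str  # name of the timeline this attribute belongs to (assumed field)


@dataclass
class StoryElement:
    """A character or a location; both carry timeline-specific attributes."""
    name: str
    attributes: list = field(default_factory=list)

    def attributes_on(self, timeline: str) -> list:
        """Return only the attributes that belong to the given timeline."""
        return [a for a in self.attributes if a.timeline == timeline]


# Hypothetical sample data reusing the examples from the list above.
maria = StoryElement("Maria", [
    Attribute("Appearance", "tall", "Present Day"),
    Attribute("Goals", "to open a restaurant", "Present Day"),
    Attribute("Appearance", "short, with braids", "Childhood"),
])

present = [a.text for a in maria.attributes_on("Present Day")]
flashback = [a.text for a in maria.attributes_on("Childhood")]
print(present)
print(flashback)
```

Because each attribute is tied to a timeline, a flashback can describe the same character differently without overwriting the present-day description.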

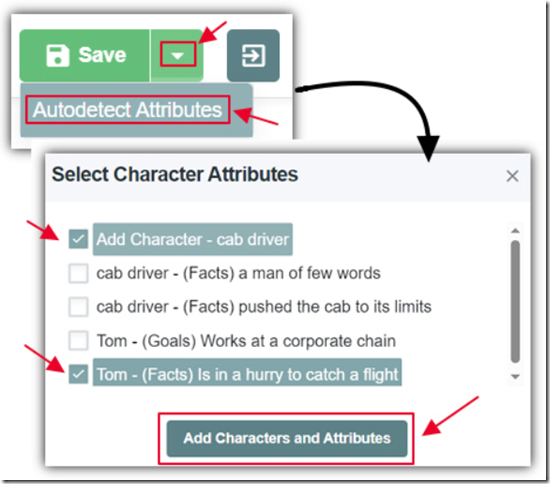

Auto detection

It is far too much work to expect an author to fill the database with all the information before writing prose. Therefore, a key feature of AIStoryBuilders is that the program can compare the elements in the current paragraph to elements already in the database and allow you to automatically update the database.
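
The comparison step can be sketched roughly as follows. This is a simplified Python illustration, not how AIStoryBuilders does it: it assumes some earlier step has already extracted the character names that appear in the paragraph, and the function name `find_new_characters` and the dictionary layout (character name mapped to a set of aliases) are hypothetical.

```python
def find_new_characters(detected_names: list, database: dict) -> list:
    """Return detected names that match no character name or alias in the database."""
    known = set()
    for name, aliases in database.items():
        known.add(name.casefold())
        known.update(alias.casefold() for alias in aliases)

    new_names = []
    for name in detected_names:
        # Skip names already known, and avoid proposing the same name twice.
        if name.casefold() not in known and name not in new_names:
            new_names.append(name)
    return new_names


# Hypothetical database: character name -> set of aliases.
database = {"Maria": {"Mom"}, "Tom": set()}

# Names assumed to have been found in the current paragraph.
detected = ["Mom", "Tom", "Elena"]

proposed = find_new_characters(detected, database)

# In a real program the author would confirm before anything is saved.
for name in proposed:
    database.setdefault(name, set())
print(proposed)
```

Here "Mom" is recognized as an alias of an existing character, so only the genuinely new name is proposed as an addition.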

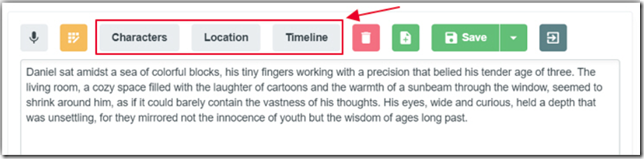

Every Paragraph Is Special

I cannot overemphasize the importance of understanding that the AI can only generate acceptable content one paragraph at a time (about 300-500 words). Therefore, it is crucial to keep track of all elements, such as characters, locations, and timelines, on a per-paragraph basis.

This approach allows you to adjust the timeline for a paragraph that, for example, contains a flashback. The attributes for the characters and locations will now include only those pertinent to that timeline. When generating AI content for a specific paragraph, only the relevant information will be sent to the large language model. This ensures that the content produced by the AI is pertinent and coherent within that context.

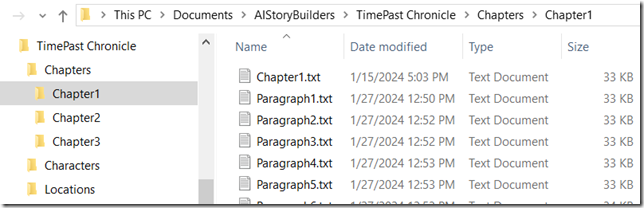

Using Text Files To Store The Data

Another interesting challenge was “what format should I use to store the data?”. If I had created AIStoryBuilders as a web application I would have used a normal SQL cloud database. However, that would cost money to maintain, so I would have had to charge money for AIStoryBuilders, and I didn’t want to do that. I decided to make it a Microsoft Windows desktop application and that limited the database options. I realized that the simple text files I used in the proof-of-concept AIOrchestrator application provided great performance so I decided to stick with them.

How safe is your data?

If the company that has your data goes out of business you could lose all your data! This is a very, very bad thing. I did not want that to happen to people, so I decided to store the database in easy-to-read text files on the user’s computer. I feel this is the safest and best situation for the users of my program.

Anatomy Of A Prompt

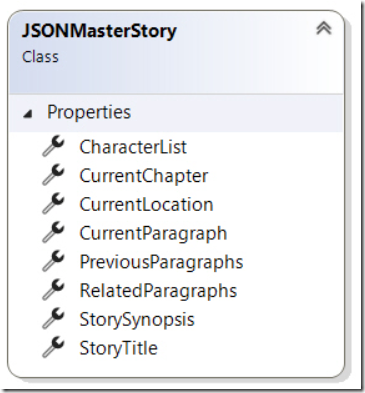

Now that you have all the important elements of your story in this huge database, how do you selectively send the right information to the AI at the right time?

Every time you hit the AI button to create new content, or ask AIStoryBuilders to alter your existing content, a lot happens. Essentially it creates a Master Story Object and sends this to the Large Language Model. You can get the details here: https://documentation.aistorybuilders.com/AnatomyOfAPrompt.html.

However, again I used ChatGPT to summarize the main points:

- System Message: This section specifies persistent instructions for the OpenAI model, such as stylistic guidelines, avoiding repetitive manual input for each paragraph creation.

- Story Title: The story's title, inputted on the Details tab, provides the AI with an essential thematic overview of the story.

- Story Style: Selection of a specific style, like Drama or Sci-Fi, guides the AI's writing approach, aligning with the chosen genre's conventions.

- Story Synopsis: A general overview of the story, inputted on the Details tab, offers context to the AI without being overly detailed, aiding in paragraph creation.

- Current Chapter: Information in the Chapter Synopsis assists the AI in understanding the chapter's focus, balancing detail with the broader story context.

- Current Location: Details about the paragraph's location, including timeline-specific attributes, enhance the AI's contextual understanding for paragraph development.

- Character List: Information on characters and their timeline-specific attributes for the current paragraph section informs the AI's portrayal of these characters.

- Previous Paragraphs: Essential for continuity, this includes preceding content to guide AIStoryBuilders in crafting subsequent paragraphs, especially at the chapter's start.

- Related Paragraphs: Utilizes a vector search of previous chapters to include top related paragraphs, providing the AI with comprehensive background for current content creation.

- Current Paragraph: The AI either creates new content following the previous section or rewrites existing content based on provided instructions and contextual elements.
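
The sections listed above could be assembled along these lines. This is a minimal Python sketch under stated assumptions, not the actual AIStoryBuilders format: the function name `build_prompt`, the JSON key names, and the choice to send the object as a JSON string in a system/user message pair are all hypothetical. See the Anatomy Of A Prompt documentation linked above for the real details.

```python
import json


def build_prompt(system_message, story_title, story_style, story_synopsis,
                 current_chapter, current_location, character_list,
                 previous_paragraphs, related_paragraphs, current_paragraph):
    """Bundle the story sections into one object and wrap it as chat messages."""
    # Key names are illustrative only; they mirror the sections listed above.
    master_story_object = {
        "story_title": story_title,
        "story_style": story_style,
        "story_synopsis": story_synopsis,
        "current_chapter": current_chapter,
        "current_location": current_location,
        "character_list": character_list,
        "previous_paragraphs": previous_paragraphs,
        "related_paragraphs": related_paragraphs,
        "current_paragraph": current_paragraph,
    }
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": json.dumps(master_story_object, indent=2)},
    ]


# Hypothetical sample story data.
messages = build_prompt(
    system_message="Write in third person, past tense.",
    story_title="The Harbor",
    story_style="Drama",
    story_synopsis="A widow rebuilds her life by opening a restaurant.",
    current_chapter="Maria signs the lease on the old fish market.",
    current_location={"name": "Old Harbor", "description": "foggy, half abandoned"},
    character_list=[{"name": "Maria", "goals": ["to open a restaurant"]}],
    previous_paragraphs=["Maria stood at the door with the key in her hand."],
    related_paragraphs=["Years earlier, her mother had sold fish on this very pier."],
    current_paragraph="",
)

prompt_word_count = sum(len(m["content"].split()) for m in messages)
print(prompt_word_count)
```

Only the location and character attributes for the paragraph's timeline would go into `current_location` and `character_list`, which is how the prompt stays relevant to the paragraph being written.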

But, It Works!

The good news is that it works. You build up your own unique database for the story, ask the program to generate or alter your prose, and it produces relevant, coherent content.

It is all I could ask for.

Links

- Articles

- Github